Kubernetes (k8s) v1.17.2 high-availability deployment notes

Compiled and deployed on CentOS 7.x with k8s v1.17.2.

Last updated: 2023-05-04

For a binary deployment, see the following article:

https://22v.net/article/3263/

System and components used by the cluster

centos 7.x

kubelet

kubeadm

kubectl

docker-ce

keepalived

haproxy

traefik

flannel

System and components used on the external network

centos 7.x

nginx # serves traffic to the outside

Environment overview

Internal (k8s) network

# Hosts

192.168.0.80 master1

192.168.0.81 master2

192.168.0.82 master3

192.168.0.84 node1

192.168.0.85 node2

192.168.0.86 node3

# Virtual IP (not a host)

192.168.0.100 vip (the virtual IP)

External (nginx) network

192.168.0.200

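1.0 Base environment

All hosts

1.1 Disable SELinux

$ setenforce 0

# Disable permanently

$ sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

1.2 Disable the swap partition or swap file

# Disable temporarily

$ swapoff -a

# Back up /etc/fstab, then drop the swap entries from it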

$ yes | cp /etc/fstab /etc/fstab_bak

$ cat /etc/fstab_bak |grep -v swap > /etc/fstab
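Note that `grep -v swap` drops every line containing the string swap, including any mount point whose path happens to contain it. A gentler variant, sketched below, only comments out entries whose filesystem type is swap. The function name comment_swap is made up for this sketch.

```shell
#!/usr/bin/env bash
# comment_swap (hypothetical helper): read fstab-formatted text on stdin and
# comment out entries whose third field (filesystem type) is "swap".
comment_swap() {
  awk '
    /^[[:space:]]*#/ { print; next }         # already a comment, keep unchanged
    $3 == "swap"     { print "#" $0; next }  # active swap entry, comment it out
                     { print }               # everything else passes through
  '
}
```

It could be used in place of the grep step as `comment_swap < /etc/fstab_bak > /etc/fstab`.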

1.3 Configure kernel network parameters

$ vi /etc/sysctl.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

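$ sysctl -p

# If sysctl reports that the net.bridge.* keys do not exist, load the bridge netfilter module with `modprobe br_netfilter` and run sysctl -p again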

1.4 Load the IPVS kernel modules

# Load for the current boot

$ modprobe -- ip_vs

$ modprobe -- ip_vs_rr

$ modprobe -- ip_vs_wrr

$ modprobe -- ip_vs_sh

$ modprobe -- nf_conntrack_ipv4

# Load on every boot

$ vi /etc/sysconfig/modules/ipvs.modules

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

1.5 Disable the firewall

$ systemctl stop firewalld

$ systemctl disable firewalld

1.6 Configure hosts

All hosts

$ vi /etc/hosts

192.168.0.80 master1

192.168.0.81 master2

192.168.0.82 master3

192.168.0.84 node1

192.168.0.85 node2

192.168.0.86 node3

192.168.0.100 vip
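Since the same entries go onto every host, it can help to add them with a script that is safe to run more than once. The following is a minimal sketch; add_host_entry is a hypothetical helper name, and it only checks for an exact IP and name pair in the first two fields.

```shell
#!/usr/bin/env bash
# add_host_entry (hypothetical helper): append "IP NAME" to a hosts file
# unless a line with the same IP and name is already present.
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  if ! awk -v ip="$ip" -v name="$name" \
      '$1 == ip && $2 == name { found = 1 } END { exit !found }' "$file"; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}
```

For example, `add_host_entry /etc/hosts 192.168.0.80 master1` adds the line on the first run and does nothing on later runs.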

2.0 Install kubelet, kubeadm and kubectl

All hosts

2.1 Add the Kubernetes yum repository

Use the Aliyun yum mirror

$ vi /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

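2.2 Install all three together

# Pin the packages to 1.17.2; without a version, yum installs the latest release in the repository

$ yum install -y kubelet-1.17.2 kubeadm-1.17.2 kubectl-1.17.2

2.3 Start kubelet

$ systemctl enable kubelet

$ systemctl start kubelet

Note: at this point `systemctl status kubelet` should show the service failing with exit code 255, because kubeadm has not yet generated the kubelet configuration. For any other error, check the details with journalctl -xe.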

3.0 Install docker-ce

All hosts

For installing docker-ce, see the official documentation (Overview of Docker editions): https://docs.docker.com/install/overview/

3.1 Configure docker

1. Set the cgroup driver to systemd

# Check the current cgroup driver

$ docker info | grep -i cgroup

# Append the --exec-opt native.cgroupdriver=systemd option

$ sed -i "s#^ExecStart=/usr/bin/dockerd.*#ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --exec-opt native.cgroupdriver=systemd#g" /usr/lib/systemd/system/docker.service

$ systemctl daemon-reload # reload the unit files

$ systemctl enable docker # start docker on boot

$ systemctl restart docker # restart docker

# Alternatively, set it in the docker configuration file (restart docker afterwards)

$ vim /etc/docker/daemon.json

{

"exec-opts": ["native.cgroupdriver=systemd"]

}

2. Pull the required images in advance

# List the images kubeadm needs

$ kubeadm config images list

k8s.gcr.io/kube-apiserver:v1.17.2

k8s.gcr.io/kube-controller-manager:v1.17.2

k8s.gcr.io/kube-scheduler:v1.17.2

k8s.gcr.io/kube-proxy:v1.17.2

k8s.gcr.io/pause:3.1

k8s.gcr.io/etcd:3.4.3-0

k8s.gcr.io/coredns:1.6.5

Because of network restrictions, pull the images from a mirror registry in China instead:

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.17.2

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.17.2

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.17.2

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.17.2

registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1

registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.3-0

registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.6.5

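Taking the first image, kube-apiserver, as an example:

$ docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.17.2

Then re-tag it under its k8s.gcr.io name, and repeat both steps for every image in the list: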

$ docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.17.2 k8s.gcr.io/kube-apiserver:v1.17.2
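Repeating the pull and tag by hand for seven images is error-prone, so the steps can be generated in a loop. The sketch below only prints the commands so they can be reviewed first; mirror_commands is a hypothetical helper name, and the final rmi of the mirror tag is borrowed from the flannel step rather than from this section.

```shell
#!/usr/bin/env bash
MIRROR="registry.cn-hangzhou.aliyuncs.com/google_containers"
IMAGES="kube-apiserver:v1.17.2 kube-controller-manager:v1.17.2 kube-scheduler:v1.17.2 kube-proxy:v1.17.2 pause:3.1 etcd:3.4.3-0 coredns:1.6.5"

# mirror_commands (hypothetical helper): print the pull, tag and cleanup
# command for each image instead of running them.
mirror_commands() {
  local image
  for image in $IMAGES; do
    echo "docker pull ${MIRROR}/${image}"
    echo "docker tag ${MIRROR}/${image} k8s.gcr.io/${image}"
    echo "docker rmi ${MIRROR}/${image}"
  done
}
```

Once the printed commands look right, `mirror_commands | sh` runs them on the current host.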

3. Prepare the flannel image

# Network component

quay.io/coreos/flannel:v0.11.0-amd64

$ docker pull quay-mirror.qiniu.com/coreos/flannel:v0.11.0-amd64

$ docker tag quay-mirror.qiniu.com/coreos/flannel:v0.11.0-amd64 quay.io/coreos/flannel:v0.11.0-amd64

$ docker rmi quay-mirror.qiniu.com/coreos/flannel:v0.11.0-amd64

4.0 Configure high availability

Install and configure keepalived and haproxy on master1, master2 and master3

4.1 Install keepalived

$ yum install -y keepalived

$ systemctl start keepalived

$ systemctl enable keepalived

4.2 Configure keepalived

$ vi /etc/keepalived/keepalived.conf

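! Configuration File for keepalived

global_defs { # global settings

    notification_email { # notification recipients, more than one allowed

        301109640@qq.com

    }

    notification_email_from Alexandre.Cassen@firewall.loc # notification sender, change as needed

    smtp_server 127.0.0.1 # mail server address

    smtp_connect_timeout 30 # mail server connection timeout

    router_id LVS_1 # machine identifier; can be left as-is and may be the same on several machines

}

vrrp_instance VI_1 { # VRRP instance name

    state MASTER # initial state of this node: master

    interface eth0 # interface that sends the VIP advertisements

    lvs_sync_daemon_interface eth0

    virtual_router_id 79 # virtual router ID; it is the last byte of the virtual router MAC address

    advert_int 1 # VIP advertisement interval in seconds

    priority 100 # priority of this node; the master must be higher than the others. I use master1 100, master2 80, master3 70

    authentication { # authentication settings

        auth_type PASS # authentication type; PASS is a plain-text password

        auth_pass 1111 # password; must be identical on every router in this virtual router

    }

    virtual_ipaddress { # VIP

        192.168.0.100/20 # the VIP 192.168.0.100

    }

}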
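Only the state and priority lines differ between the three masters. The sketch below prints the pair for a given host; the priorities are the ones listed in the comment above, while using state BACKUP on master2 and master3 is an assumption of this sketch, since only master1's file is shown. vrrp_settings is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# vrrp_settings (hypothetical helper): print the two keepalived lines that
# differ per master, keyed by host name.
vrrp_settings() {
  case "$1" in
    master1) echo "state MASTER"; echo "priority 100" ;;
    master2) echo "state BACKUP"; echo "priority 80" ;;   # BACKUP is assumed
    master3) echo "state BACKUP"; echo "priority 70" ;;   # BACKUP is assumed
    *) echo "unknown host: $1" >&2; return 1 ;;
  esac
}
```

Running `vrrp_settings "$(hostname -s)"` on a master shows which values belong in that machine's keepalived.conf.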

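After keepalived has been restarted with this configuration (systemctl restart keepalived), `ip addr` on master1 shows something like: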

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:1c:42:fe:77:9d brd ff:ff:ff:ff:ff:ff

inet 192.168.0.80/24 brd 192.168.0.255 scope global noprefixroute eth0

valid_lft forever preferred_lft forever

inet 192.168.0.100/20 scope global eth0 # VIP

valid_lft forever preferred_lft forever

inet6 fe80::1260:cb23:3c22:7ae0/64 scope link noprefixroute

valid_lft forever preferred_lft forever

4.3 Install haproxy

On the three master machines

$ yum install -y haproxy

4.4 Start haproxy

$ systemctl start haproxy

$ systemctl enable haproxy

4.5 Configure haproxy

$ vi /etc/haproxy/haproxy.cfg

global

chroot /var/lib/haproxy

daemon

group haproxy

user haproxy

log 127.0.0.1:514 local0 warning

pidfile /var/lib/haproxy.pid

maxconn 20000

spread-checks 3

nbproc 8

defaults

log global

mode tcp

retries 3

option redispatch

listen https-apiserver

bind 0.0.0.0:8443 # port 8443 here, since kube-apiserver already listens on 6443 on these hosts

mode tcp

balance roundrobin

timeout server 900s

timeout connect 15s

server master1 192.168.0.80:6443 check port 6443 inter 5000 fall 5

server master2 192.168.0.81:6443 check port 6443 inter 5000 fall 5

server master3 192.168.0.82:6443 check port 6443 inter 5000 fall 5

$ systemctl restart haproxy
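The three server lines in the listen section follow a single pattern, so they can be generated from a list of masters when the set of control-plane nodes changes. This is a minimal sketch; backend_lines is a hypothetical helper name and the name=ip argument format is chosen only for this example.

```shell
#!/usr/bin/env bash
# backend_lines (hypothetical helper): print one haproxy "server" line per
# name=ip argument, using the same check options as the config above.
backend_lines() {
  local entry name ip
  for entry in "$@"; do
    name="${entry%%=*}"   # text before the first "="
    ip="${entry#*=}"      # text after the first "="
    echo "server ${name} ${ip}:6443 check port 6443 inter 5000 fall 5"
  done
}
```

For the hosts in this guide the call would be `backend_lines master1=192.168.0.80 master2=192.168.0.81 master3=192.168.0.82`.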

5.0 Master node configuration

Check carefully which machine each step runs on

5.1 Initialize master1

$ kubeadm init --pod-network-cidr=10.244.0.0/16 \

--kubernetes-version=v1.17.2 \

--control-plane-endpoint 192.168.0.100:8443 \

--upload-certs

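Parameter notes

--pod-network-cidr=10.244.0.0/16 # pod network range, the one flannel expects

--kubernetes-version=v1.17.2 # use v1.17.2

--control-plane-endpoint 192.168.0.100:8443 # the VIP, on haproxy port 8443

--upload-certs # upload the control-plane certificates so that master2 and master3 can fetch them when joining with --certificate-key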
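The same settings can also be kept in a kubeadm configuration file, which is easier to keep under version control than a long command line. The sketch below assumes the kubeadm.k8s.io/v1beta2 configuration API that kubeadm v1.17 uses; the helper name write_kubeadm_config and the file name are arbitrary.

```shell
#!/usr/bin/env bash
# write_kubeadm_config (hypothetical helper): write a ClusterConfiguration
# equivalent to the init flags above into the file given as $1.
write_kubeadm_config() {
  cat > "$1" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.17.2
controlPlaneEndpoint: "192.168.0.100:8443"
networking:
  podSubnet: "10.244.0.0/16"
EOF
}
```

After `write_kubeadm_config kubeadm-config.yaml`, the init step would become `kubeadm init --config kubeadm-config.yaml --upload-certs`.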

5.2 Prompts after initialization

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Follow the prompt to set up the kubeconfig file

$ mkdir -p $HOME/.kube

$ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

$ sudo chown $(id -u):$(id -g) $HOME/.kube/config

Note:

# If this step is skipped, `kubectl get nodes` may fail with the following error:

The connection to the server localhost:8080 was refused - did you specify the right host or port?

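5.3 Install the flannel network plugin

Other network plugins are installed in a similar way

$ kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

If the file above is unreachable because of network restrictions, download it through a proxy, copy it to master1, and run: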

$ kubectl apply -f kube-flannel.yml

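5.4 Add master2 and master3 as prompted

You can also add master2 and master3 first and install the flannel plugin afterwards

# When init completes on master1 it prints a command like the one below; the token, hash and certificate key are from my machine, do not copy them

# Note that the join address is the VIP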

$ kubeadm join 192.168.0.100:8443 --token krllh1.6iox663hvpgs1dis \

--discovery-token-ca-cert-hash sha256:380392e059d462a40db5c7b3f0190610c52172ebd4d8beaa0bbfa0261a163db9 \

--control-plane --certificate-key 0d10929e0201d0b840b57cc6cf4a71ce2e756c0bab837568d5770a91c9e2e5d6

Once the join succeeds, repeat the steps from 5.2 so that `kubectl get nodes` works on every master node

6.0 Configure the worker nodes

6.1 Join node1, node2 and node3

# Run on master1

$ kubeadm token create --print-join-command

It returns something like:

kubeadm join 192.168.0.100:8443 --token ya3mcs.m6uya0s4lkronn1u \

--discovery-token-ca-cert-hash sha256:380392e059d462a40db5c7b3f0190610c52172ebd4d8beaa0bbfa0261a163db9

Copy this command to node1, node2 and node3 and run it on each

$ kubeadm join 192.168.0.100:8443 --token ya3mcs.m6uya0s4lkronn1u \

--discovery-token-ca-cert-hash sha256:380392e059d462a40db5c7b3f0190610c52172ebd4d8beaa0bbfa0261a163db9

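Once the join succeeds, repeat the steps from 5.2 so that `kubectl get nodes` also works on every worker node. kubeadm join does not create /etc/kubernetes/admin.conf on worker nodes, so copy that file over from a master first.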
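A join command copied by hand is easy to truncate. Bootstrap tokens have a fixed shape (six characters, a dot, then sixteen characters, all lowercase letters or digits), so a quick format check can catch a bad paste before running kubeadm join. The helper name is_bootstrap_token is made up for this sketch, and it checks the format only, not whether the token is still valid.

```shell
#!/usr/bin/env bash
# is_bootstrap_token (hypothetical helper): succeed if $1 has the shape of a
# kubeadm bootstrap token, i.e. [a-z0-9]{6}.[a-z0-9]{16}.
is_bootstrap_token() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}
```

For example, `is_bootstrap_token ya3mcs.m6uya0s4lkronn1u && echo ok` prints ok. Tokens expire after 24 hours by default, so a well-formed token can still be rejected; in that case create a new one on master1 with kubeadm token create --print-join-command.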

6.2 Check the nodes and pods

# List the nodes

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

master1 Ready master 2d v1.17.2

master2 Ready master 2d v1.17.2

master3 Ready master 2d v1.17.2

node1 Ready <none> 2d v1.17.2

node2 Ready <none> 2d v1.17.2

node3 Ready <none> 2d v1.17.2

# List all pods

$ kubectl get pods --all-namespaces -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-6955765f44-cgkzs 1/1 Running 2 2d

kube-system coredns-6955765f44-nbvj2 1/1 Running 2 2d

kube-system etcd-master1 1/1 Running 2 2d

kube-system etcd-master2 1/1 Running 1 2d

kube-system etcd-master3 1/1 Running 1 2d

kube-system kube-apiserver-master1 1/1 Running 2 2d

kube-system kube-apiserver-master2 1/1 Running 1 2d

kube-system kube-apiserver-master3 1/1 Running 1 2d

kube-system kube-controller-manager-master1 1/1 Running 18 2d

kube-system kube-controller-manager-master2 1/1 Running 12 2d

kube-system kube-controller-manager-master3 1/1 Running 11 2d

kube-system kube-flannel-ds-amd64-685mj 1/1 Running 1 2d

kube-system kube-flannel-ds-amd64-bd4xg 1/1 Running 2 2d

kube-system kube-flannel-ds-amd64-bf9cb 1/1 Running 2 2d

kube-system kube-flannel-ds-amd64-mt4v2 1/1 Running 1 2d

kube-system kube-flannel-ds-amd64-qb57h 1/1 Running 1 2d

kube-system kube-flannel-ds-amd64-spl2h 1/1 Running 1 2d

kube-system kube-proxy-5htr9 1/1 Running 1 2d

kube-system kube-proxy-5k542 1/1 Running 1 2d

kube-system kube-proxy-dkl7d 1/1 Running 1 2d

kube-system kube-proxy-pqpbd 1/1 Running 2 2d

kube-system kube-proxy-qfdv6 1/1 Running 1 2d

kube-system kube-proxy-t8vp8 1/1 Running 1 2d

kube-system kube-scheduler-master1 1/1 Running 13 2d

kube-system kube-scheduler-master2 1/1 Running 11 2d

kube-system kube-scheduler-master3 1/1 Running 18 2d

# List all services

$ kubectl get services --all-namespaces

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 2d

7.0 Install traefik

Run the following on master1

7.1 IngressRoute Definition

Save the YAML for this section as res.yaml

$ vi res.yaml

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

  name: ingressroutes.traefik.containo.us

spec:

  group: traefik.containo.us

  version: v1alpha1

  names:

    kind: IngressRoute

    plural: ingressroutes

    singular: ingressroute

  scope: Namespaced

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

  name: ingressroutetcps.traefik.containo.us

spec:

  group: traefik.containo.us

  version: v1alpha1

  names:

    kind: IngressRouteTCP

    plural: ingressroutetcps

    singular: ingressroutetcp

  scope: Namespaced

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

  name: middlewares.traefik.containo.us

spec:

  group: traefik.containo.us

  version: v1alpha1

  names:

    kind: Middleware

    plural: middlewares

    singular: middleware

  scope: Namespaced

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

  name: tlsoptions.traefik.containo.us

spec:

  group: traefik.containo.us

  version: v1alpha1

  names:

    kind: TLSOption

    plural: tlsoptions

    singular: tlsoption

  scope: Namespaced

---

apiVersion: apiextensions.k8s.io/v1beta1

kind: CustomResourceDefinition

metadata:

  name: traefikservices.traefik.containo.us

spec:

  group: traefik.containo.us

  version: v1alpha1

  names:

    kind: TraefikService

    plural: traefikservices

    singular: traefikservice

  scope: Namespaced

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1beta1

metadata:

  name: traefik-ingress-controller

rules:

  - apiGroups:

      - ""

    resources:

      - services

      - endpoints

      - secrets

    verbs:

      - get

      - list

      - watch

  - apiGroups:

      - extensions

    resources:

      - ingresses

    verbs:

      - get

      - list

      - watch

  - apiGroups:

      - extensions

    resources:

      - ingresses/status

    verbs:

      - update

  - apiGroups:

      - traefik.containo.us

    resources:

      - middlewares

    verbs:

      - get

      - list

      - watch

  - apiGroups:

      - traefik.containo.us

    resources:

      - ingressroutes

    verbs:

      - get

      - list

      - watch

  - apiGroups:

      - traefik.containo.us

    resources:

      - ingressroutetcps

    verbs:

      - get

      - list

      - watch

  - apiGroups:

      - traefik.containo.us

    resources:

      - tlsoptions

    verbs:

      - get

      - list

      - watch

  - apiGroups:

      - traefik.containo.us

    resources:

      - traefikservices

    verbs:

      - get

      - list

      - watch

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1beta1

metadata:

  name: traefik-ingress-controller

roleRef:

  apiGroup: rbac.authorization.k8s.io

  kind: ClusterRole

  name: traefik-ingress-controller

subjects:

  - kind: ServiceAccount

    name: traefik-ingress-controller

    namespace: default

7.2 Services

Take the YAML from the Services section of the official documentation, drop the whoami demo, and save it as srv.yaml

$ vi srv.yaml

apiVersion: v1

kind: Service

metadata:

  name: traefik

spec:

  type: NodePort # added by me

  ports:

    - protocol: TCP

      name: web

      port: 8000

    - protocol: TCP

      name: admin

      port: 8080

    - protocol: TCP

      name: websecure

      port: 4443

  selector:

    app: traefik

7.3 Deployments

Take the YAML from the Deployments section of the official documentation, drop the whoami part, and save it as deploy.yaml

$ vi deploy.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

  namespace: default

  name: traefik-ingress-controller

---

kind: Deployment

apiVersion: apps/v1

metadata:

  namespace: default

  name: traefik

  labels:

    app: traefik

spec:

  replicas: 1

  selector:

    matchLabels:

      app: traefik

  template:

    metadata:

      labels:

        app: traefik

    spec:

      serviceAccountName: traefik-ingress-controller

      containers:

        - name: traefik

          image: traefik:v2.0

          args:

            - --api.insecure

            - --accesslog

            - --entrypoints.web.Address=:8000

            - --entrypoints.websecure.Address=:4443

            - --providers.kubernetescrd

            - --certificatesresolvers.default.acme.tlschallenge

            - --certificatesresolvers.default.acme.email=foo@you.com

            - --certificatesresolvers.default.acme.storage=acme.json

            # Please note that this is the staging Let's Encrypt server.

            # Once you get things working, you should remove that whole line altogether.

            - --certificatesresolvers.default.acme.caserver=https://acme-staging-v02.api.letsencrypt.org/directory

          ports:

            - name: web

              containerPort: 8000

            - name: websecure

              containerPort: 4443

            - name: admin

              containerPort: 8080

7.4 Apply the three files

$ kubectl apply -f res.yaml

$ kubectl apply -f srv.yaml

$ kubectl apply -f deploy.yaml

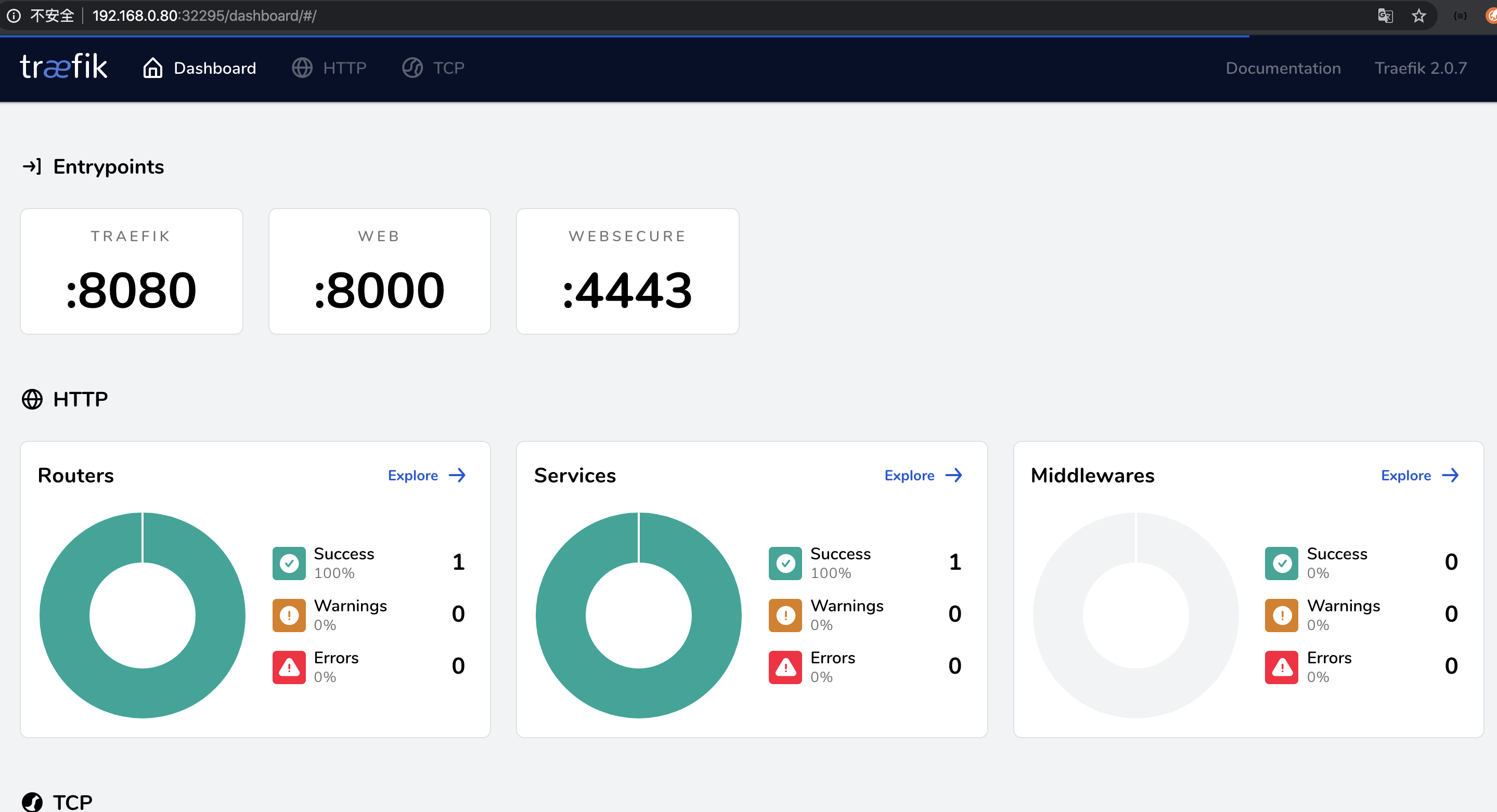

7.5 Check that traefik was installed successfully

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

traefik-74dfb956c5-r9vl6 1/1 Running 1 43h

$ kubectl get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

traefik NodePort 10.97.29.190 <none> 8000:31596/TCP,8080:32295/TCP,4443:31681/TCP 43h

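Here:

web port: 8000 is exposed on every node at port 31596

admin port: 8080 is exposed on every node at port 32295

https port: 4443 is exposed on every node at port 31681

The traefik dashboard is now reachable through the IP of any master or node: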

http://192.168.0.80:32295
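The node ports are assigned at random, so they will differ on another cluster. The sketch below picks the node port for a given service port out of the PORT(S) column shown above; node_port is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# node_port (hypothetical helper): given the PORT(S) column of
# `kubectl get services` as $1 and a service port as $2, print the node port.
node_port() {
  printf '%s\n' "$1" \
    | tr ',' '\n' \
    | awk -F'[:/]' -v port="$2" '$1 == port { print $2 }'   # fields: port, nodePort, protocol
}
```

For example, `node_port '8000:31596/TCP,8080:32295/TCP,4443:31681/TCP' 8080` prints 32295. kubectl can usually return the same value directly with a jsonpath query such as `kubectl get service traefik -o jsonpath='{.spec.ports[?(@.name=="admin")].nodePort}'`.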

8.0 Configure the external nginx

The configuration file is given directly

Install nginx on 192.168.0.200 and configure the proxy

$ vi /etc/nginx/conf.d/test.api.zituo.net.conf

server {

server_name test.api.zituo.net;

listen 80; # demo only, no https here; use https in a real deployment

location /{

add_header X-Content-Type-Options nosniff;

add_header X-powered-by "golang";

proxy_pass http://api;

proxy_http_version 1.1;

proxy_set_header Host $host; # required: traefik routes on this host name, and the request fails without it

proxy_read_timeout 3600s;

proxy_send_timeout 12s;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header REMOTE-HOST $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_buffering on;

proxy_buffer_size 128k;

proxy_buffers 8 1M;

proxy_busy_buffers_size 2M;

proxy_max_temp_file_size 1024m;

}

}

upstream api {

server 192.168.0.86:31596; # any internal node; several nodes can be listed; 31596 is traefik's web port

}
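As the comment on the server line says, the upstream can list several nodes, which avoids depending on a single node being up. The sketch below prints such a block for a list of node IPs; upstream_block is a hypothetical helper name, and nginx balances across the listed servers round-robin by default.

```shell
#!/usr/bin/env bash
# upstream_block (hypothetical helper): print an nginx upstream block.
# $1 = upstream name, $2 = node port, remaining arguments = node IPs.
upstream_block() {
  local name="$1" port="$2" ip
  shift 2
  echo "upstream ${name} {"
  for ip in "$@"; do
    echo "    server ${ip}:${port};"
  done
  echo "}"
}
```

For the three worker nodes in this guide: `upstream_block api 31596 192.168.0.84 192.168.0.85 192.168.0.86`.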

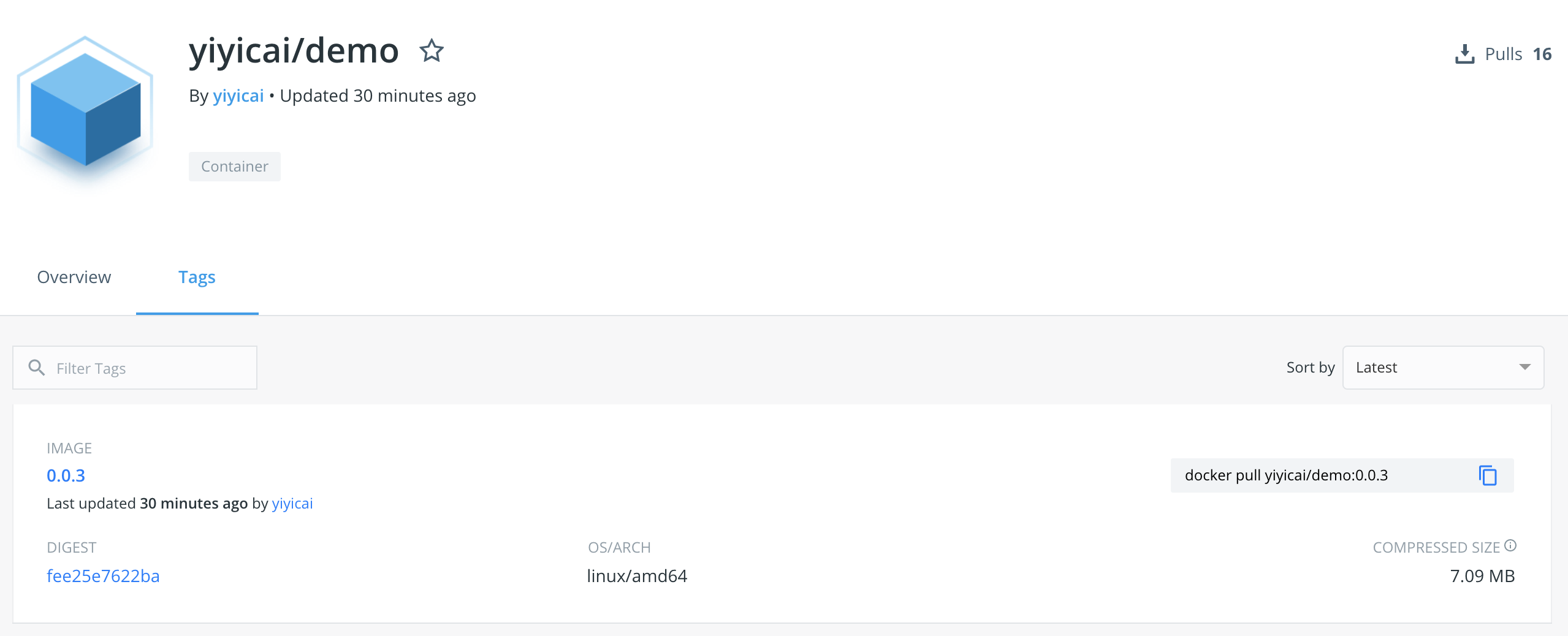

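9.0 Deploy an application

This deploys an application called demo-app together with its service, and routes to it through traefik

9.1 Deploy the application

The image has already been pushed to Docker Hub: https://hub.docker.com/r/yiyicai/demo/tags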

$ vi app.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

  namespace: default # namespace

  name: demo-app # Deployment name

spec:

  replicas: 1

  selector:

    matchLabels:

      app: demo-app

  template:

    metadata:

      labels:

        app: demo-app

    spec:

      containers:

        - name: demo-app # container name

          image: yiyicai/demo:0.0.3 # a demo image I published to Docker Hub

          ports:

            - name: web

              containerPort: 10001 # port the application listens on

---

apiVersion: v1

kind: Service # service

metadata:

  name: demo-app-service

  namespace: default

spec:

  selector:

    app: demo-app # selects the demo-app pods

  ports:

    - name: web

      port: 10001 # port the service exposes

      protocol: TCP

$ kubectl apply -f app.yaml

Once it succeeds, the default namespace contains a new pod and a new service

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

demo-app-6db768879b-2x8sn 1/1 Running 0 6m39s

traefik-74dfb956c5-r9vl6 1/1 Running 1 2d

$ kubectl get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

demo-app-service ClusterIP 10.101.186.210 <none> 10001/TCP 43h

9.2 Add a route to traefik

$ vi demo-app-router.yaml

apiVersion: traefik.containo.us/v1alpha1

kind: IngressRoute

metadata:

  name: demo-app-service-ingress-router

  namespace: default

spec:

  entryPoints:

    - web

  routes:

    - match: Host(`test.api.zituo.net`) # host name to match

      kind: Rule

      services:

        - name: demo-app-service # target service

          port: 10001 # service port to use

$ kubectl apply -f demo-app-router.yaml
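Further applications need a route of the same shape, with only the name, host, service and port changing. The sketch below prints such a manifest from four arguments; ingress_route is a hypothetical helper name, and the web entry point and default namespace are carried over from the file above.

```shell
#!/usr/bin/env bash
# ingress_route (hypothetical helper): print an IngressRoute manifest.
# $1 = route name, $2 = host name, $3 = service name, $4 = service port.
ingress_route() {
  cat <<EOF
apiVersion: traefik.containo.us/v1alpha1
kind: IngressRoute
metadata:
  name: $1
  namespace: default
spec:
  entryPoints:
    - web
  routes:
    - match: Host(\`$2\`)
      kind: Rule
      services:
        - name: $3
          port: $4
EOF
}
```

The output can be piped straight into kubectl, for example `ingress_route demo-app-service-ingress-router test.api.zituo.net demo-app-service 10001 | kubectl apply -f -`.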

9.3 Test access

Together with the nginx from section 8.0, add a temporary entry to the hosts file of the client machine, then access test.api.zituo.net

$ vi /etc/hosts

192.168.0.200 test.api.zituo.net

$ curl -i http://test.api.zituo.net

HTTP/1.1 200 OK

Server: nginx/1.16.1

Date: Wed, 12 Feb 2020 07:51:46 GMT

Content-Type: application/json; charset=utf-8

Content-Length: 143

Connection: keep-alive

X-Content-Type-Options: nosniff

X-powered-by: golang

{

"uid": 1,

"username": "新月却泽滨",

"mobile": "13980019001",

"address": "成都市高新区天府4街",

"created": 1581493915

}

Or open it in a browser:

http://test.api.zituo.net

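Closing remarks

That concludes the k8s high-availability installation and configuration. Some details of my environment:

System: macOS 10.15.2

Virtual machines: Parallels Desktop (2 cores, 4 GB per VM)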

docker:19.03.5

CentOS Linux release 7.7.1908 (Core)

Linux master1 3.10.0-1062.el7.x86_64

My contact details:

QQ:301109640

blog:https://22v.net